An Intellyx BrainBlog by Jason English

It seems like every vendor is pushing an AI-washed story these days. Generative AI hype is at an all-time high thanks to ChatGPT, Midjourney, and many newer startups and projects. Companies that fear missing out on the next big thing are spending money on AI functionality as a loss leader, or slapping an LLM chatbot in front of their apps.

It makes me wonder: Wait a minute, is this really going to help customers? And, how are we supposed to start making money from so much AI hype?

For most companies, building out a complete AI stack would be prohibitively expensive and resource intensive. Who has the money and resources to literally design their own AI, and how would the new venture make enough money to stay afloat, considering massive R&D and infrastructure costs?

Overinvestment in AI is not the answer

Right now, venture capital is on the hunt for any startup claiming to revolutionize machine learning or use GenAI in some novel way. Tech titans are placing big bets on tools in the sector. Will another project even come close to getting $10 billion for a minority share like OpenAI got from Microsoft?

You never know what might happen up there in the stratosphere. For the rest of our efforts here on Earth, we can run experiments, but we will only be able to meaningfully adopt AI once it provides business value for customers.

Eventually the bills will come due on so many AI projects, and there will be a great reckoning that will divide the products that can find a market niche and help companies capture revenue, from those that won’t.

The cost of infrastructure will climb quickly, as will the cost of GPUs, data ingress/egress, and architecture. Companies large and small will struggle to find experienced AI modeling experts or machine learning data scientists willing to start working on yet another project.

A supply chain of loosely coupled composite AI applications

If enterprises want to see a return on their AI investments, they must prove that their chosen strategy is applicable to real-world business and societal problems, rather than serving as window dressing.

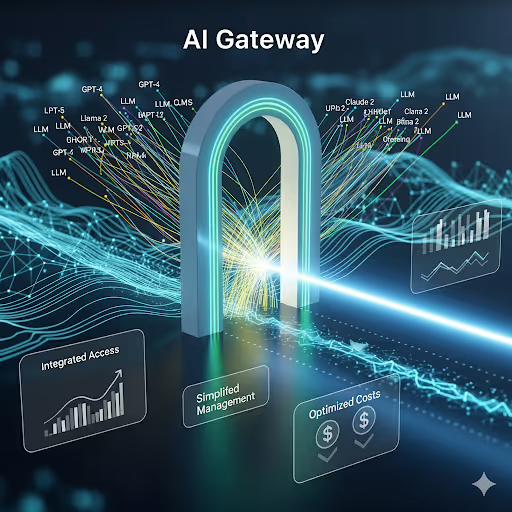

To get there, we can’t depend on just one form of AI. We’ll need composite AI: a supply chain consisting of multiple AI suppliers, with multiple models and multiple training data sets working together in a loosely coupled fashion, based on the right fit for the job.

In any other industry, from cars to sneakers, manufacturers collaborate with specialized supply chain and logistics partners to fulfill customer demand. In much the same way, there will be a supply chain for assembling composite AI-based applications from model components.

AI producers and consumers need a multi-party interface to flexibly associate with each other. Since each provider would need to interface with others, the interface between partners would likely be expressed as an API, but with specifications for the workload at hand.

Metering is the dialtone of composite AI apps

Just like any SaaS platform or app on your smartphone, when you use AI-driven applications, you are entering into a partnership with the vendor as well as underlying AI providers—though the contracts may be represented as APIs rather than formal terms of service.

How can we manage the ground rules of AI services so everyone gets paid enough to continue improving quality and performance, while still delivering for customers without surprise billing or functional failures? It’s a huge problem, and current approaches leave the wires hanging, with real customer service and liability implications on the line.

The AI supplier pays for the non-trivial initial cost of curating ML data, training the model, and making it ready for consumption through an API or an interface.

AI suppliers that don’t bill for usage would still need to know what consumers are doing with their AI-based application—even if the only point of doing so would be to justify the investment for community utility and business partners.

We can solve this problem with better AI metering, much as telco providers have done for decades. The party on either end of a call would buy their own local telephone infrastructure with flat rates for local calls, while the long distance or international calling service would handle the remote connection on its own network, billing the sender or receiver of the call by the connection, or by the minute.
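To make the telco analogy concrete, here is a minimal sketch of rating metered usage with a per-connection fee plus a per-unit rate (a minute of call time, or a unit of AI work such as a token). The function name `rate_usage` and the prices are illustrative assumptions, not any provider's actual pricing or API.

```python
from decimal import Decimal


def rate_usage(connections, units, per_connection, per_unit):
    """Return the charge for metered usage.

    connections    -- number of calls or invocations made
    units          -- total metered units (minutes, tokens, etc.)
    per_connection -- hypothetical flat fee per call, as a Decimal
    per_unit       -- hypothetical fee per metered unit, as a Decimal
    """
    # Decimal avoids binary floating-point rounding in money arithmetic.
    return connections * per_connection + Decimal(str(units)) * per_unit


# Illustrative prices only: 3 calls totalling 10 units.
charge = rate_usage(3, 10, Decimal("0.05"), Decimal("0.02"))
print(charge)
```

A supplier that does not bill for usage could set both rates to zero and still keep the connection and unit counts as a usage record.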

AI inference models, LLMs and feature libraries are hosted in data centers and cloud hyperscalers around the world, as reserved instances or ephemeral microservices and Lambda functions. Wherever they reside, AI metering acts like a common ‘dialtone’ for AI-enabled applications to measure, assemble and run workloads.

Bringing AI metering out for distribution

Major cloud marketplace aggregators like Salesforce, Azure and AWS are well known for offering huge catalogs of subscription-based application services and tools, including AI services, but the measurement of usage and billing generally occurs within the confines of that aggregator’s account interface.

Enterprises that deliver applications have gotten used to applying various forms of observability and monitoring to try to understand usage, but once logs are recorded, they are already history.

To build composite AI apps, we need a way to understand exactly how a mix of models is invoked and executed to contribute to each end result.

Take for instance a financial advisory composite AI app. When an investment client asks for some recommendations, the advisor asks the app through a conversational LLM for value predictions based on 6, 12, and 24-month horizons. The LLM then invokes one AI service for risk profiling, another for comparing asset categories, and yet another service that looks at trendlines. Still another GenAI tool may generate a client report out of all of the results.

Each model invocation in the chain is metered, so the company can determine which models are proving valuable to customers, and how to compensate all of the providers or check their work.
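The advisory scenario above can be sketched as follows. This is a minimal in-memory illustration under stated assumptions, not a real metering SDK: the `InMemoryMeter` class, the provider and model names, and the unit counts are all hypothetical, and each chain step stands in for an actual model call.

```python
from collections import defaultdict
from dataclasses import dataclass, field
import time


@dataclass
class MeterEvent:
    provider: str      # hypothetical AI supplier name
    model: str         # hypothetical model or service name
    customer_id: str   # who the work was done for
    units: float       # metered quantity (tokens, calls, ...)
    timestamp: float = field(default_factory=time.time)


class InMemoryMeter:
    """Toy stand-in for a metering service; keeps events in a list."""

    def __init__(self):
        self.events = []

    def record(self, provider, model, customer_id, units=1.0):
        self.events.append(MeterEvent(provider, model, customer_id, units))

    def usage_by_model(self):
        # Aggregate per (provider, model) so different units are never mixed.
        totals = defaultdict(float)
        for event in self.events:
            totals[(event.provider, event.model)] += event.units
        return dict(totals)


def advise(meter, customer_id):
    """Simulate one advisory request and meter each invocation in the chain."""
    chain = [
        ("llm-vendor", "conversational-llm", 1200),   # assumed token count
        ("risk-co", "risk-profiler", 1),              # one call
        ("asset-co", "asset-comparer", 1),            # one call
        ("trend-co", "trendline-analyzer", 1),        # one call
        ("llm-vendor", "report-generator", 800),      # assumed token count
    ]
    for provider, model, units in chain:
        # A real app would invoke the model here, then record its usage.
        meter.record(provider, model, customer_id, units)
    return len(chain)


meter = InMemoryMeter()
advise(meter, "client-42")
for key, total in meter.usage_by_model().items():
    print(key, total)
```

Keeping one event per invocation, rather than only running totals, is what lets the company later attribute value to individual providers or audit a single request.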

Metering of AI models doesn’t have to work any differently than metering API-based services. That’s why Amberflo offers a GitHub metering repo of useful scripts and metering SDKs for modern development platforms like Go, Python and Java, and plugins for API gateways such as Kong.

The Intellyx Take

Metering is the telemetry of AI monetization and utility. It gives us insight into exactly how substrate algorithms, inference models and applied processes contribute value to composite AI applications.

Think about the alternative: not metering AI would leave all providers and consumers helpless, in a multi-party chain dependent on logs and other artifacts, with no common understanding of usage.

Therefore, metering should be embedded within every API service producer interface and REST-style call to a feature, as well as within the consuming AI-driven application itself.
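One way to picture metering embedded in a producer interface is a decorator that records a usage event around every call. This is a sketch under assumptions: the `metered` decorator, the `USAGE_LOG` list, and the `risk_profile` service are hypothetical names for illustration, and a production system would send events to a metering backend rather than a local list.

```python
import functools
import time

# Hypothetical local sink; a real deployment would ship events elsewhere.
USAGE_LOG = []


def metered(meter_name):
    """Wrap a service function so every call emits a usage event."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            finally:
                # Record the call even if the service raised an error.
                USAGE_LOG.append({
                    "meter": meter_name,
                    "duration_s": time.perf_counter() - start,
                })
        return wrapper
    return decorator


@metered("risk_profile.calls")
def risk_profile(portfolio):
    # Placeholder logic standing in for a real AI service call.
    return {"risk": "moderate", "holdings": len(portfolio)}


result = risk_profile(["AAA", "BBB"])
print(result, len(USAGE_LOG))
```

The same wrapper could sit on the consuming application's side of the call, so that producer and consumer each hold their own record of the usage.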

Capturing real-time and historical usage metering will make AI a valuable contributor to critical applications, instead of a cost sinkhole.

©2024 Intellyx B.V. Intellyx is editorially responsible for this document. No AI bots were used to write this content. At the time of writing, Amberflo is an Intellyx client.
