Enterprises today are moving fast to adopt Generative AI and Large Language Models (LLMs). But as adoption accelerates, teams often find themselves tangled in a mess of fragmented APIs, complex integrations, and runaway costs.

Amberflo is here to change that.

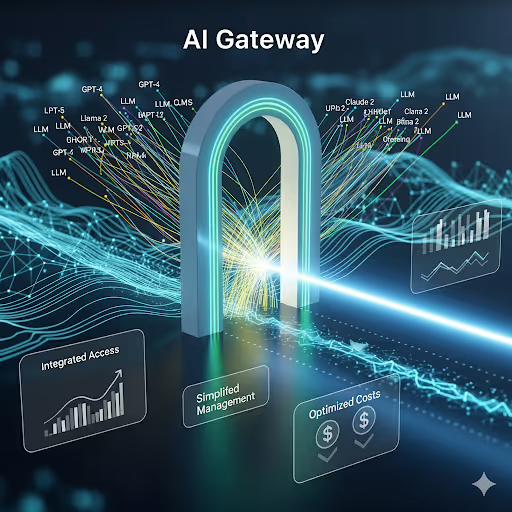

We’re excited to introduce the Amberflo AI Gateway, a new service that brings universal LLM access (including custom-built, distilled models), enterprise-grade governance, and real-time FinOps capabilities together in one unified platform. With it, you can simplify AI integration, eliminate waste, and optimize spend, turning AI rollout into a true driver of business value.

Why a Gateway for AI & LLM?

Managing multiple LLM providers (OpenAI, Anthropic, AWS Bedrock, Google Vertex AI, Cohere, Hugging Face, and dozens more) means juggling different APIs, authentication methods, logging formats, and cost structures. That complexity creates friction, slows down innovation, and makes it almost impossible to get a real-time view of usage and costs.

Additionally, many enterprises are distilling LLMs with their own private datasets to create custom models, which they run inside their own VPC or on on-prem GPU clusters to control data flow and costs. Usage metering, cost rating, and cost allocation for these models are hard because they sit outside any model provider's billing framework.

Amberflo AI Gateway solves this problem by delivering a single, secure interface to 100+ models. All interactions use the familiar OpenAI API format, so your developers write once and run anywhere. At the same time, finance and operations teams gain deep visibility into usage and costs, with controls to keep AI adoption efficient and accountable.
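To make "write once and run anywhere" concrete, here is a minimal sketch of what an OpenAI-format chat request aimed at a gateway could look like. The base URL, virtual key, and model names are placeholders invented for illustration and are not taken from Amberflo documentation; the request is built but never sent.

```python
import json
import urllib.request

# Hypothetical values for illustration only; not real Amberflo endpoints or keys.
GATEWAY_BASE_URL = "https://gateway.example.com/v1"
VIRTUAL_KEY = "sk-example-virtual-key"


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{GATEWAY_BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {VIRTUAL_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Only the model name changes between targets; the request shape stays the same.
for model in ("example-hosted-model", "example-distilled-model"):
    req = build_chat_request(model, "Summarize last month's usage.")
    print(req.get_method(), req.full_url, json.loads(req.data)["model"])
```

The point of the sketch is the design choice rather than the specific names: application code depends on one request shape, and switching models becomes a one-string change.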

What Makes Amberflo AI Gateway Different?

Most gateways on the market stop at routing traffic—they’re little more than API proxies. Amberflo AI Gateway is different: it’s built directly into the Amberflo Enterprise FinOps for AI Platform, and comes with a full set of observability and FinOps features baked in.

That means the gateway is not just a centralized point for LLM access and governance, but also a FinOps cost-management engine for all your AI workloads. Key capabilities include:

- AI Metering: Real-time aggregation of input/output tokens, requests, and responses across all LLMs, tagged by team, app, or department.

- LLM Cost & Rating: Apply list prices or custom internal rates instantly as usage flows through the gateway.

- Cost Guards & Alerts: Set thresholds by model, app, or version with real-time notifications.

- Chargeback & Billing: Allocate costs with advanced rules, free tiers, overages, and budgets.

- Workload Planning: Access a CPQ-style tool with a full catalog of models, prices, and usage projections to guide budgeting and forecasts.
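As a rough illustration of how metering, rating, and cost guards fit together, the sketch below aggregates token usage by team, applies per-model rates, and flags teams that exceed a budget. The rates, model names, team tags, and function names are all made up for the example; this is not Amberflo's implementation or pricing.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal

# Illustrative rates in USD per 1,000 tokens; these are not real list prices.
RATES = {
    "hosted-model": {"input": Decimal("0.0025"), "output": Decimal("0.0100")},
    "distilled-internal": {"input": Decimal("0.0004"), "output": Decimal("0.0008")},
}


@dataclass
class UsageEvent:
    team: str  # tag used for allocation (could equally be app or department)
    model: str
    input_tokens: int
    output_tokens: int


def rate_event(event: UsageEvent) -> Decimal:
    """Rate one request: tokens / 1000 * rate, summed over input and output."""
    rate = RATES[event.model]
    return (
        Decimal(event.input_tokens) / 1000 * rate["input"]
        + Decimal(event.output_tokens) / 1000 * rate["output"]
    )


def cost_by_team(events: list[UsageEvent]) -> dict[str, Decimal]:
    """Meter and rate: accumulate rated cost per team tag."""
    totals: dict[str, Decimal] = defaultdict(Decimal)
    for event in events:
        totals[event.team] += rate_event(event)
    return dict(totals)


def over_budget(totals: dict[str, Decimal], budgets: dict[str, Decimal]) -> list[str]:
    """Cost guard: return teams whose rated cost exceeds their budget."""
    return sorted(team for team, cost in totals.items() if team in budgets and cost > budgets[team])


events = [
    UsageEvent("search", "hosted-model", 2000, 500),
    UsageEvent("search", "distilled-internal", 10000, 1000),
    UsageEvent("support", "hosted-model", 1000, 1000),
]
totals = cost_by_team(events)
alerts = over_budget(totals, {"search": Decimal("0.01"), "support": Decimal("0.05")})
print(totals, alerts)
```

Using `Decimal` rather than floats keeps the rated amounts exact, which matters once the same numbers feed chargeback or billing.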

Benefits for Your Business

The Amberflo AI Gateway isn’t just about simplifying integration—it’s about enabling enterprises to move faster, with confidence, and at lower cost.

- Accelerate Development: Eliminate months of integration work; deploy new models the day they’re released.

- Reduce Complexity: Standardize logging, authentication, and error handling across all models.

- Optimize Costs: Track granular usage data, enforce budgets, and compare costs across providers.

- Scale with Confidence: Handle variable workloads with built-in load balancing, fallbacks, and rate controls.
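The fallback behavior mentioned above can be pictured with a small sketch: try providers in priority order and move to the next one when a call fails. The `ProviderError` type, the `call_with_fallback` helper, and the stand-in provider functions are hypothetical and exist only for this example; a real gateway would add timeouts, health checks, and load balancing on top.

```python
from typing import Callable, Sequence


class ProviderError(Exception):
    """Raised by a provider call that failed (hypothetical error type)."""


def call_with_fallback(
    providers: Sequence[tuple[str, Callable[[str], str]]],
    prompt: str,
) -> tuple[str, str]:
    """Try each (name, call) pair in order; return the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            errors.append(f"{name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))


# Stand-in providers for the example; no network calls are made.
def flaky_primary(prompt: str) -> str:
    raise ProviderError("rate limited")


def stable_secondary(prompt: str) -> str:
    return f"echo: {prompt}"


used, answer = call_with_fallback(
    [("primary", flaky_primary), ("secondary", stable_secondary)],
    "hello",
)
print(used, answer)
```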

Designed for the Enterprise

Amberflo AI Gateway was built with enterprise-grade requirements in mind:

- Universal Access: 100+ model providers supported, with consistent OpenAI-format responses.

- Reliability: Load balancing, failover support, and health monitoring built in.

- Security & Governance: Virtual keys, fine-grained access control, custom authentication, and complete audit trails.

- Observability: Multi-platform logging, Prometheus metrics, dashboards, and API callbacks.

- Deployment Flexibility: Run it in your cloud VPC or on-prem data center for maximum control.

Real-World Use Cases

- Deployment of Distilled Models: Meter and track usage, define rates, allocate costs, and optimize spend for your own inference models.

- Platform Teams: Standardize model access across development groups.

- AI Applications: Build assistants, chatbots, and content tools that seamlessly switch between providers.

- FinOps & Finance: Control AI spend at scale with detailed analytics and cost allocation.

- Multi-Model Workflows: Run different models for different tasks—all through one interface.

Get Started Today

Enterprises don’t have to choose between innovation and control. With Amberflo AI Gateway, you can have both.

Contact us to start your free trial today.
